Make a Gif of a Website’s Evolution

Monday, December 3, 2018 at 20:19

For the latest StackExchange “time”-themed contest, I made a gif showing the evolution of StackOverflow from 2008 to today:

(click on the image to play it again)

The first step was to find a decent API for the Internet Archive. It supports Memento, an HTTP-based protocol defined in RFC 7089 in 2013. Using the memento_client wrapper, we can get the closest snapshot of a website at a given date with the following Python code:

from datetime import datetime, timedelta

from memento_client import MementoClient

# Use the Wayback Machine as the Memento TimeGate
mc = MementoClient(timegate_uri="https://web.archive.org/web/",
                   check_native_timegate=False)


def get_snapshot_url(url, dt):
    """Return the URL of the snapshot closest to dt, or None if there is none."""
    info = mc.get_memento_info(url, dt)
    closest = info.get("mementos", {}).get("closest")
    if closest:
        return closest["uri"][0]
    return None


# As an example, let’s look at StackOverflow two weeks ago
url = "https://stackoverflow.com/"
two_weeks_ago = datetime.now() - timedelta(weeks=2)
snapshot_url = get_snapshot_url(url, two_weeks_ago)
print("StackOverflow from ~2 weeks ago: %s" % snapshot_url)

Don’t forget to install the memento_client lib:

pip install memento_client

Note this gives us the closest snapshot, so it might not be exactly two weeks ago.
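To see how far off the closest snapshot actually is, one option is to read the timestamp embedded in the snapshot URL. The sketch below assumes the URL contains a 14-digit YYYYMMDDhhmmss timestamp right after /web/, as in the Wayback Machine URLs used in this post, and that this timestamp is in UTC. The helper names snapshot_datetime and snapshot_offset are made up for this example.

```python
import re
from datetime import datetime

# Assumption: snapshot URLs look like
# https://web.archive.org/web/<14-digit timestamp>[modifier]/<original URL>
_TIMESTAMP_RE = re.compile(r"/web/(\d{14})")


def snapshot_datetime(snapshot_url):
    """Return the capture time encoded in a snapshot URL, or None if absent."""
    match = _TIMESTAMP_RE.search(snapshot_url)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y%m%d%H%M%S")


def snapshot_offset(snapshot_url, requested):
    """Return the absolute gap between the snapshot and the requested datetime.

    `requested` is expected to be a naive datetime in the same timezone as
    the URL timestamp (assumed UTC here).
    """
    taken = snapshot_datetime(snapshot_url)
    if taken is None:
        return None
    return abs(taken - requested)
```

Since the example above uses datetime.now(), which is local time, the computed gap may be off by your UTC offset; for snapshots taken weeks apart this is unlikely to matter.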

We can use this code in a loop with an increasing time delta in order to get snapshots at different times. But we don’t only want to get the URLs: we want to take a screenshot of each one.
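The looping part could look something like the sketch below. The iter_dates helper is a hypothetical name, not part of memento_client; it only generates the dates to ask the archive for, and the start date, end date, and step are up to you.

```python
from datetime import datetime, timedelta


def iter_dates(start, end, step):
    """Yield datetimes from start to end (inclusive), spaced by step."""
    if step <= timedelta(0):
        raise ValueError("step must be a positive timedelta")
    current = start
    while current <= end:
        yield current
        current += step
```

Each yielded date can then be passed to get_snapshot_url, for example with a step of timedelta(weeks=5).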

The easiest way to programmatically take a screenshot of a webpage is probably to use Selenium. I used Chrome, which needs the ChromeDriver executable; you can either download it from the ChromeDriver website or run the following command if you’re on a Mac with Homebrew:

brew install bfontaine/utils/chromedriver

We also need to install Selenium for Python:

pip install selenium

The code is pretty short:

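from selenium import webdriver


def make_screenshot(url, filename):
    # The argument is the path to the chromedriver executable. This is the
    # Selenium 3 style; newer Selenium releases may expect a Service object.
    driver = webdriver.Chrome("chromedriver")
    try:
        driver.get(url)
        driver.save_screenshot(filename)
    finally:
        # Always close the browser, even if loading the page fails
        driver.quit()


url = "https://web.archive.org/web/20181119211854/https://stackoverflow.com/"
make_screenshot(url, "stackoverflow_20181119211854.png")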

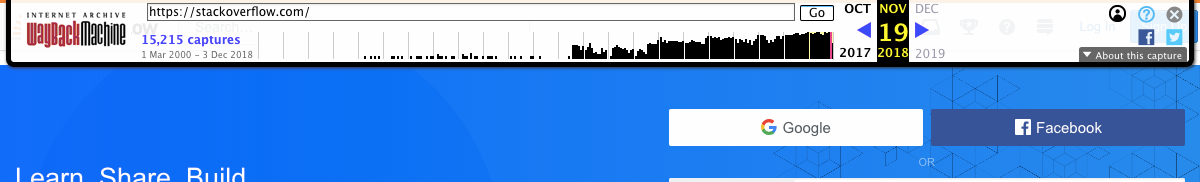

If you run the code above, you should see a Chrome window open, navigate to the URL on its own, then close once the page is fully loaded. You now have a screenshot of this page in stackoverflow_20181119211854.png! However, you’ll quickly notice the screenshot includes the Wayback Machine’s header over the top of the website.

This is handy when browsing through snapshots by hand, but not so much when we access them from Python.

Fortunately, we can get a header-less URL by changing it a bit: we can append id_ to the end of the date in order to get the page exactly as it was when the bot crawled it. However, this means it links to CSS and JS files that may not exist anymore. We can get a URL to an archived page that has been slightly modified to replace links with their archived version using im_ instead.

- Page with header and rewritten links: https://web.archive.org/web/20181119211854/...
- Original page, as it was when crawled: https://web.archive.org/web/20181119211854id_/...
- Original page with rewritten links: https://web.archive.org/web/20181119211854im_/...
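Rather than editing URLs by hand, the modifier can be added programmatically. This is a minimal sketch that assumes the snapshot URL has a 14-digit timestamp after /web/, as in the examples above; with_modifier is a hypothetical helper name.

```python
import re

# Matches "/web/<14 digits>" followed by an optional existing modifier
# (such as "id_" or "im_") and the slash that ends the timestamp segment.
_WAYBACK_RE = re.compile(r"(/web/\d{14})[a-z]*_?/")


def with_modifier(snapshot_url, modifier="im_"):
    """Return snapshot_url with its Wayback modifier set to `modifier`.

    Pass an empty string to get back the plain URL (with the header).
    URLs that don't match the expected pattern are returned unchanged.
    """
    return _WAYBACK_RE.sub(
        lambda m: m.group(1) + modifier + "/", snapshot_url, count=1
    )
```

For example, with_modifier(snapshot_url) can be applied to the value returned by get_snapshot_url before passing it to make_screenshot.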

Re-running the code using the modified URL gives us the correct screenshot:

url = "https://web.archive.org/web/20181119211854im_/https://stackoverflow.com/"

make_screenshot(url, "stackoverflow_20181119211854.png")

Joining the two bits of code, we can take screenshots of a URL at regular intervals. You may want to check the images once it’s done to remove inconsistencies. For example, the archived snapshots of Google’s homepage aren’t all in the same language.
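One possible way to join them is sketched below. It is not the exact script used for the gif: capture_history is a hypothetical name, the two functions from earlier are passed in as parameters so the loop can be tried without network access, and the snapshot_NNNN.png naming scheme is an assumption chosen so that files sort chronologically.

```python
import re
from datetime import datetime, timedelta


def capture_history(url, start: datetime, end: datetime, step: timedelta,
                    get_snapshot_url, make_screenshot):
    """Screenshot the closest snapshot of `url` at each step from start to end.

    Returns a list of (snapshot_url, filename) pairs, in chronological order.
    """
    captured = []
    seen = set()
    current = start
    while current <= end:
        snapshot_url = get_snapshot_url(url, current)
        current += step
        # Several dates can map to the same closest snapshot: skip repeats
        if not snapshot_url or snapshot_url in seen:
            continue
        seen.add(snapshot_url)
        # Add the im_ modifier to drop the Wayback Machine header
        # (assumes a 14-digit timestamp with no modifier yet)
        headerless = re.sub(r"(/web/\d{14})/", r"\1im_/", snapshot_url, count=1)
        # Zero-padded index so that *.png lists the frames in order
        filename = "snapshot_%04d.png" % len(captured)
        make_screenshot(headerless, filename)
        captured.append((headerless, filename))
    return captured
```

A call could look like capture_history("https://stackoverflow.com/", datetime(2008, 9, 1), datetime.now(), timedelta(weeks=5), get_snapshot_url, make_screenshot), where the start date is only an example.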

Once we have all the images, we can generate a gif using ImageMagick:

convert -delay 50 -loop 1 *.png stackoverflow.gif

I used the following parameters:

- -delay 50: change frame every 0.5s. The value is in hundredths of a second.
- -loop 1: loop only once over all the frames. The default is an infinite loop, which doesn’t really make sense here.

You may want to play with the -delay parameter depending on how many images you have as well as how often the website changes.
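Because -delay is expressed in hundredths of a second, it can be derived from a target frame rate as 100 / fps. The small sketch below does that conversion and builds the argument list for the convert command; both function names are made up for this example, and the command is only built, not run.

```python
def delay_for_fps(fps):
    """Return the -delay value (hundredths of a second) for a frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return round(100 / fps)


def convert_command(frames, output, fps, loops=1):
    """Build the ImageMagick command line as a list of arguments.

    `frames` is a list of image filenames, already in the desired order.
    """
    return (["convert", "-delay", str(delay_for_fps(fps)), "-loop", str(loops)]
            + list(frames) + [output])
```

With 2 frames per second this gives -delay 50, the value used above. The resulting list can be passed to subprocess.run if you prefer to stay in Python.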

I also made a version with Google (~10MB) at 5 frames per second, with -delay 20. I used the same interval between snapshots as for the StackOverflow gif: at least 5 weeks between each screenshot. You can see which year the screenshot is from by looking at the bottom of each image.